In the final weeks of 2023, major social media platforms made a range of changes to their Community Guidelines. This burst of policy changes is the most intense period of activity the Platform Governance Archive has registered over the past year. This blog post gives an overview of the changes picked up by our system.

Between the end of November and the end of the year, Meta changed Facebook’s and Instagram’s Community Guidelines seven times, altering provisions in twelve topical areas: “Child sexual exploitation”, “Adult sexual exploitation”, “Coordinating Harm and Promoting Crime”, “Violent and Graphic Content”, “Misinformation”, “Violence and Incitement”, “Bullying and Harassment”, “Human Exploitation”, “Hate Speech”, “Sexual Solicitation”, “Dangerous Organizations and Individuals” and “Suicide, Self Injury, and Eating Disorders”.

Exception for breastfeeding and new definition of prostitution

On 27 November, Meta widened the section addressing the sexual exploitation of children to include more examples of problematic content, whilst adding a new exception for content in “the context of breastfeeding”.

The section on “Adult Sexual Exploitation” was also reformulated. Whereas it previously prohibited content offering or asking for “adult commercial services”, it now centers on “prostitution”, which Meta defines as “offering oneself or asking for sexual activities in exchange for money or anything of value”.

New provisions on outing people and other harmful activities

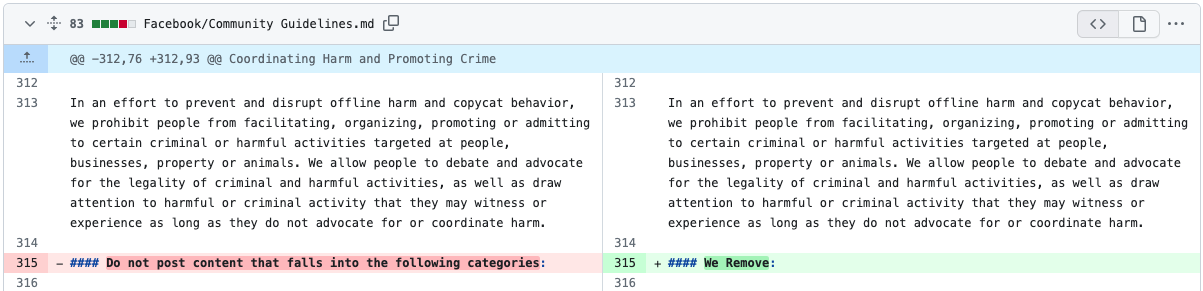

One day later, Meta changed the introductory sentence of the section on “Coordinating Harm and Promoting Crime” from “Do not post” to “We remove” and specified the provisions on “Outing the undercover status of law enforcement, military, or security personnel”.

Change in the section “Coordinating Harm and Promoting Crime” as tracked by the Platform Governance Archive

The section on outing people in general was widened to also address “Witnesses, informants, activists, detained persons or hostages” and “Defectors, when reported by credible government channel”.

New exceptions were added to the sections on swatting, harm against animals and harm against property, as well as to the section on “Voter and/or census fraud”, which was extended with an exception for “condemning, awareness raising, news reporting, or humorous or satirical contexts.”

New provision on high-risk drugs

On 30 November, Meta added a provision on “High-risk drugs” to its Community Guidelines. These are defined as “drugs that have a high potential for misuse, addiction, or are associated with serious health risks, including overdose; e.g., cocaine, fentanyl, heroin”. The new rule prohibits content related to the trade of such drugs as well as positive accounts of their personal use.

Widening of provision on photos of wounded or dead people

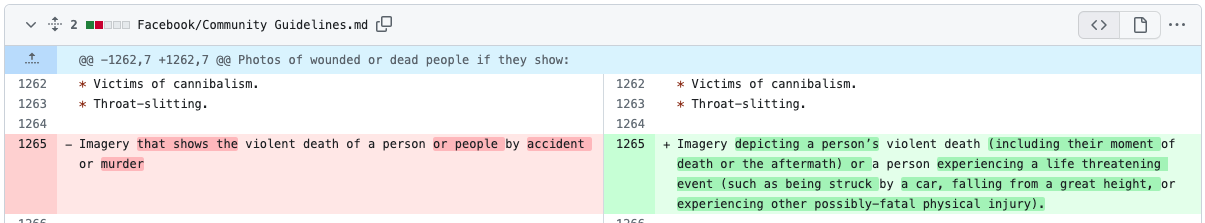

On the same day, Meta extended its provision on “Photos of wounded or dead people”, which had previously prohibited “Imagery that shows the violent death of a person or people by accident or murder”. The provision was widened significantly and now covers all “Imagery depicting a person’s violent death (including their moment of death or the aftermath) or a person experiencing a life threatening event (such as being struck by a car, falling from a great height, or experiencing other possibly-fatal physical injury).”

Change in the section “Violent and Graphic Content” as tracked by the Platform Governance Archive

AI-generated content added

On 5 December, Meta explicitly added “AI-generated content” to the scope of its Community Guidelines, which now open with the sentence: “Our Community Standards apply to everyone, all around the world, and to all types of content, including AI-generated content.”

The section addressing misinformation related to “Voter or Census Interference” was extended with an additional case: “False claims about current conditions at a U.S. voting location that would make it impossible to vote, as verified by an election authority.”

From “offline harm” to “offline violence”

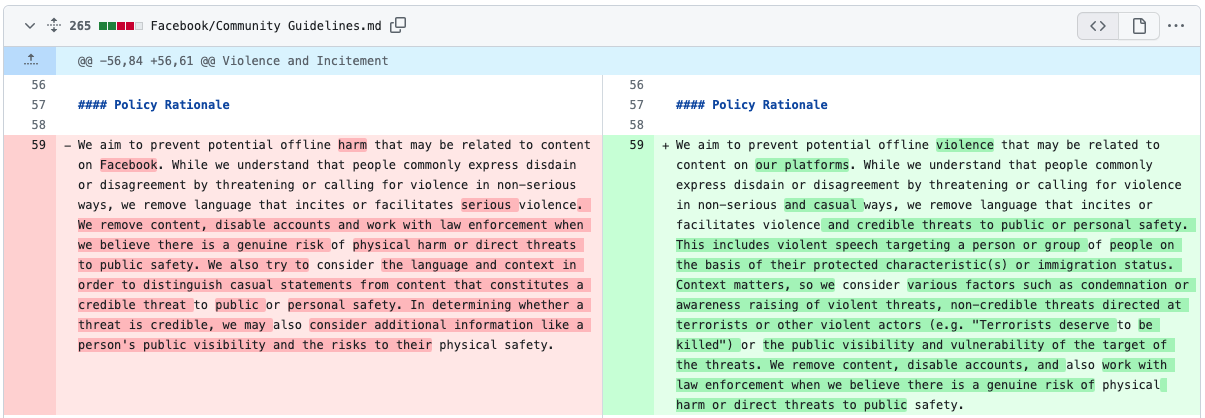

In another change, Meta altered the stated objective of its section on “Violence and Incitement” from “We aim to prevent offline harm” to “We aim to prevent offline violence”, which could be read as narrowing the scope of the provision.

At the same time, however, the section was widened: the word “serious” was dropped from the sentence “we remove language that incites or facilitates serious violence”, which now reads “we remove language that incites or facilitates violence and credible threats to public or personal safety”.

Changes in the section “Violence and Incitement” as tracked by the Platform Governance Archive

The whole section underwent significant changes, among them the addition of “universal protections for everyone” from “high-severity violence”.

Threads added to Community Guidelines

In the section on “Bullying and Harassment”, Meta added Instagram and Threads to the scope of applicability, which highlights the unified approach the company is taking to the Community Guidelines of all three platforms. The term “victim” of bullying and harassment was replaced with “target”.

The section on “Hate Speech” also underwent various changes, including a new exception for “Expressions of contempt (…) in a romantic break-up context”.

Widening of section on dangerous organizations and individuals

On 30 December, Meta closed the year by overhauling the section on “Dangerous Organizations and Individuals”. The sentence “We remove praise, substantive support, and representation of Tier 1 entities as well as their leaders, founders, or prominent members” became “We remove Glorification, Support, and Representation of Tier 1 entities, their leaders, founders or prominent members, as well as unclear references to them.” Tier 1 entities are defined as “entities that engage in serious offline harms”.

The new categories of “Violence Inducing Entities”, “Violence Inducing Conspiracy Network” and “Hate Banned Entity” were also introduced to the section and defined in detail.

Furthermore, the section on “Suicide and Self Injury” now specifically addresses eating disorders and was renamed “Suicide, Self Injury, and Eating Disorders”.

This post is based on data curated by the Platform Governance Archive (PGA), collected with Open Terms Archive. The full data set as well as options to monitor and track changes are available here.